EMBOTICS

Embotics is now part of Snow Software

Snow Software's acquisition of Embotics brings together two multi-year Gartner Magic Quadrant Leaders. It combines deep global expertise in IT Asset Management and Cloud Management under one roof, so modern IT organizations and service providers can rely on a single vendor to manage complex hybrid IT environments.

The same great product. Now, with the power of Snow.

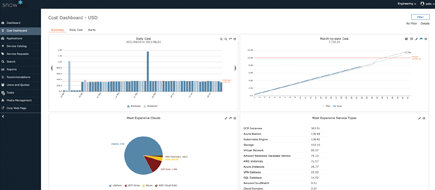

Snow Commander

Snow Commander is a hybrid cloud management platform that uses automation to give end users quick and easy access to both public and private cloud resources. With Snow Commander, organizations can simplify cloud management by providing brokered, self-service access to resources. This approach speeds provisioning, boosts agility and integrates seamlessly with your existing technology.

What We Offer

Solutions that help modern infrastructure & operations teams overcome the challenges of hybrid and multi-cloud management.

Self-Service IT

Transform your IT department into an agile MSP by enabling automated, self-service delivery.

Cloud Automation & Orchestration

Fully automate hybrid cloud service delivery and get IT resources to end users over 100x faster.

Governance

Meet the specific governance needs of your business with a hybrid cloud management tool.

Solutions for Managed Service Providers

Take your managed service practice to the next level with hybrid cloud management tooling.

Needing fewer manual steps to deploy and decommission virtual machines, along with the simplicity of the interface itself, is what makes Snow Commander stand out from the competition. And when we do need to contact support, we get a reply very quickly telling us what to expect for that case. I’m super-impressed with the support we get.